Improvisational System with Sample Feedback, 2021.

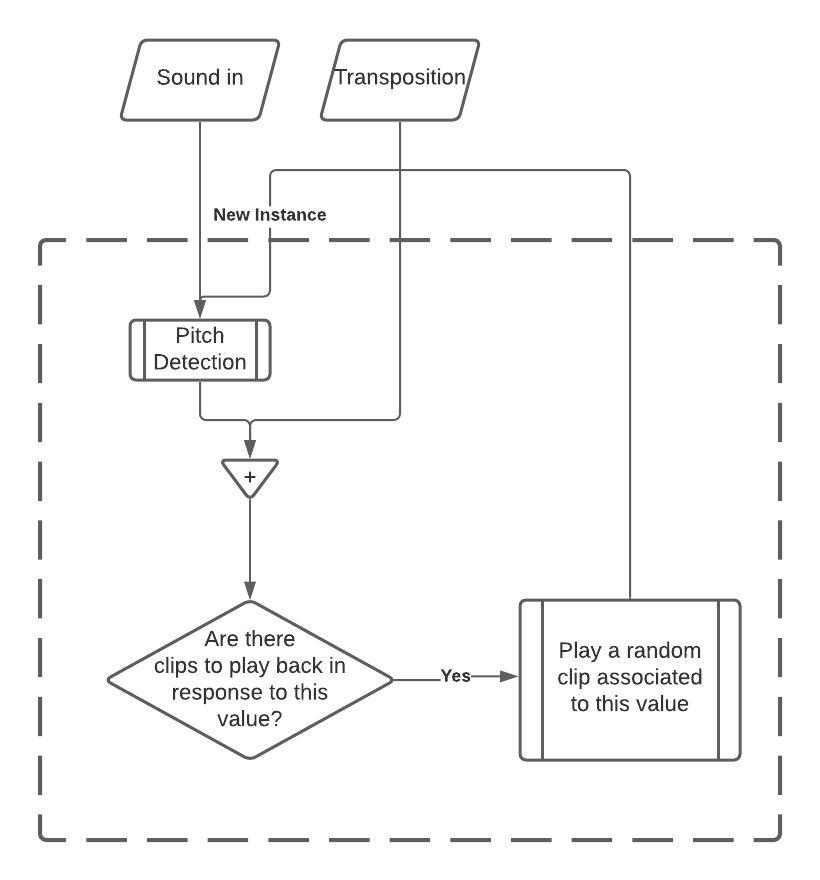

When improvising, I sometimes play a musical phrase in response to a note. In this project, I designed a MaxForLive patch that improvises in this fashion.

First, musical samples are recorded and labeled with the note after which they should be played.

Then, software detects the notes you are playing and plays back the samples labeled with those notes. This playback is fed back into the system, so notes detected in it trigger even more audio in response. See the system diagram for more detail.
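Here is a minimal toy model of that feedback loop. It assumes each recorded sample is stored with the notes it contains, which stands in for what the pitch detector would hear when the sample plays back. The `SAMPLE_BANK` contents and the `max_depth` cutoff are hypothetical; the actual patch works on live audio in Max for Live, not on note names.

```python
from collections import deque

# Hypothetical sample bank: each key is the note a sample is labeled with
# (the note after which it should play). "contains" lists the notes the
# detector would hear when that sample itself is played back.
SAMPLE_BANK = {
    "E": [{"name": "phrase_a", "contains": ["G", "B"]}],
    "G": [{"name": "phrase_b", "contains": ["D"]}],
    "D": [{"name": "phrase_c", "contains": ["E"]}],
}


def improvise(played_notes, bank, max_depth=3):
    """Return (depth, sample name) pairs in the order samples are triggered.

    Depth 0 means the note came from the performer; depth > 0 means it was
    detected in audio the system itself played back (the feedback path).
    max_depth stops the loop from feeding back forever.
    """
    events = []
    queue = deque((note, 0) for note in played_notes)
    while queue:
        note, depth = queue.popleft()
        if depth >= max_depth:
            continue
        for sample in bank.get(note, []):
            events.append((depth, sample["name"]))
            # Feedback: notes inside the played-back sample are detected again.
            for heard in sample["contains"]:
                queue.append((heard, depth + 1))
    return events


print(improvise(["E"], SAMPLE_BANK))
# [(0, 'phrase_a'), (1, 'phrase_b'), (2, 'phrase_c')]
```

A single played E sets off a chain of three phrases; without a cutoff, the D in the last phrase would retrigger the whole cycle.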

This leads to a host of possible sonic outputs. I have included audio examples from a recent solo acoustic guitar improvisation.

String Generation with Multi-Scale Self-Similarity, 2020-21.

In this project, I aim to generate sequences of tokens that are self-similar on multiple scales. The motivation is the formal structure of music, which is self-similar on many scales (subdivision, beat, bar, phrase, section, etc.).

These tokens can be all sorts of things. A sequence of notes would make a melody. More abstract encodings could be used to make things like polyphonic drum patterns and visual imagery.

First, an input sequence is chosen. To generate tokens after it, a metric (from combinatorics or DSP) is used to measure how 'self-similar' the sequence is on each of a set of frequencies.

We choose the frequency on which the sequence is least 'self-similar'. The next token is chosen to maximize the 'self-similarity' of the sequence on that frequency. This can be done both deterministically and stochastically (for instance, using Markov chains).
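A minimal deterministic sketch of this loop follows. Each frequency is represented by its period in tokens, and the metric is a simple assumed one (the fraction of positions whose token matches the token one period earlier), standing in for the combinatorial or DSP metrics mentioned above. The periods and alphabet are illustrative.

```python
def self_similarity(seq, period):
    """Fraction of positions i where seq[i] == seq[i - period] (assumed metric)."""
    pairs = len(seq) - period
    if pairs <= 0:
        return 0.0
    matches = sum(1 for i in range(period, len(seq)) if seq[i] == seq[i - period])
    return matches / pairs


def next_token(seq, periods, alphabet):
    # 1. Find the period on which the sequence is currently least self-similar
    #    (ties go to the first period listed).
    weakest = min(periods, key=lambda p: self_similarity(seq, p))
    # 2. Pick the token that most increases self-similarity on that period.
    #    max() keeps the first token in `alphabet` on ties, so this is deterministic.
    return max(alphabet, key=lambda t: self_similarity(seq + [t], weakest))


def generate(seed, n, periods=(2, 4, 8), alphabet=("x", "o", "-")):
    """Extend `seed` by n tokens, one at a time."""
    seq = list(seed)
    for _ in range(n):
        seq.append(next_token(seq, periods, alphabet))
    return seq


print("".join(generate("xox-", 12)))
```

A stochastic variant would replace the `max()` in `next_token` with sampling from the candidate tokens, weighted by their scores or by a Markov chain.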

I have provided some example output in the SoundCloud playlist. "Sine1" and "Song1" were generated in real time from singing over a drone. The rest of the examples were generated from drum patterns.

Microtonal Modulation, 2020-21.

In a number of musical traditions, techniques are established for shifting between sets of pitches, key centers, or modes (e.g., Carnātic grahabēdham and ragamalika, Western modulation, etc.).

Because standard MIDI note messages are built around twelve-tone equal temperament, software tools that use MIDI rarely support microtonal music.

This MIDI effect plugin allows for real-time transposition and modulation in any pitch set. It supports Scala .scl and .kbm files and is compatible with any synth that supports MIDI Polyphonic Expression (MPE). It was prototyped in Max/MSP and developed in C++ using JUCE.
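Below is a minimal sketch of one way a plugin like this can reach microtonal pitches over MPE. It parses a .scl file into cents, maps a scale degree to cents, and splits that into a MIDI note plus a 14-bit per-note pitch bend. This is not the plugin's C++ code. The .kbm mapping is replaced by an assumed root of MIDI note 60, and the bend range assumes ±48 semitones, a common MPE member-channel setting.

```python
import math


def parse_scl(text):
    """Parse a Scala .scl file into a list of cents values, starting with 0.0.

    Lines starting with '!' are comments; the first remaining line is the
    description, the second is the note count, then one pitch per line.
    Pitches containing '.' are cents; anything else is a ratio like 3/2 or 2.
    """
    lines = [ln.strip() for ln in text.splitlines() if not ln.strip().startswith("!")]
    count = int(lines[1].split()[0])
    cents = [0.0]  # the 1/1 degree is implicit in .scl files
    for line in lines[2:2 + count]:
        token = line.split()[0]
        if "." in token:
            cents.append(float(token))
        else:
            num, _, den = token.partition("/")
            cents.append(1200 * math.log2(int(num) / int(den or 1)))
    return cents  # last entry is the period (usually the octave)


def degree_to_cents(degree, scale_cents):
    """Cents above the root for any (possibly negative) scale degree."""
    steps, period = scale_cents[:-1], scale_cents[-1]
    repeat, index = divmod(degree, len(steps))
    return repeat * period + steps[index]


def to_midi_and_bend(cents_from_root, root_note=60, bend_range=48.0):
    """Split a pitch into (MIDI note, 14-bit pitch bend); root_note is assumed."""
    semitones = root_note + cents_from_root / 100.0
    note = round(semitones)
    offset = semitones - note  # within +/- 0.5 semitone
    bend = 8192 + round(offset / bend_range * 8192)
    return note, max(0, min(16383, bend))


JUST_FIFTH = """! just_fifth.scl
Hypothetical two-note scale
 2
 3/2
 2/1
"""

print(to_midi_and_bend(degree_to_cents(1, parse_scl(JUST_FIFTH))))  # (67, 8195)
```

Because MPE gives each sounding note its own channel, every note can carry its own bend value, so chords in arbitrary tunings work.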

After some changes to the interface, I plan on making this plugin available for free download. Find this project on GitHub.

Listen to the examples to hear some sounds I've made with it.

eye_grid, 2020.

This project is a collaboration with Kiera Saltz, made for Eli Stine's Fall 2020 Audiovisual Composition Class at Oberlin College.

To me, eye_grid is focused on the joys and innate loneliness that come with consciousness. Existence is beautiful, but because we are all in separate minds, we perceive the world differently and can never exactly share what we experience.

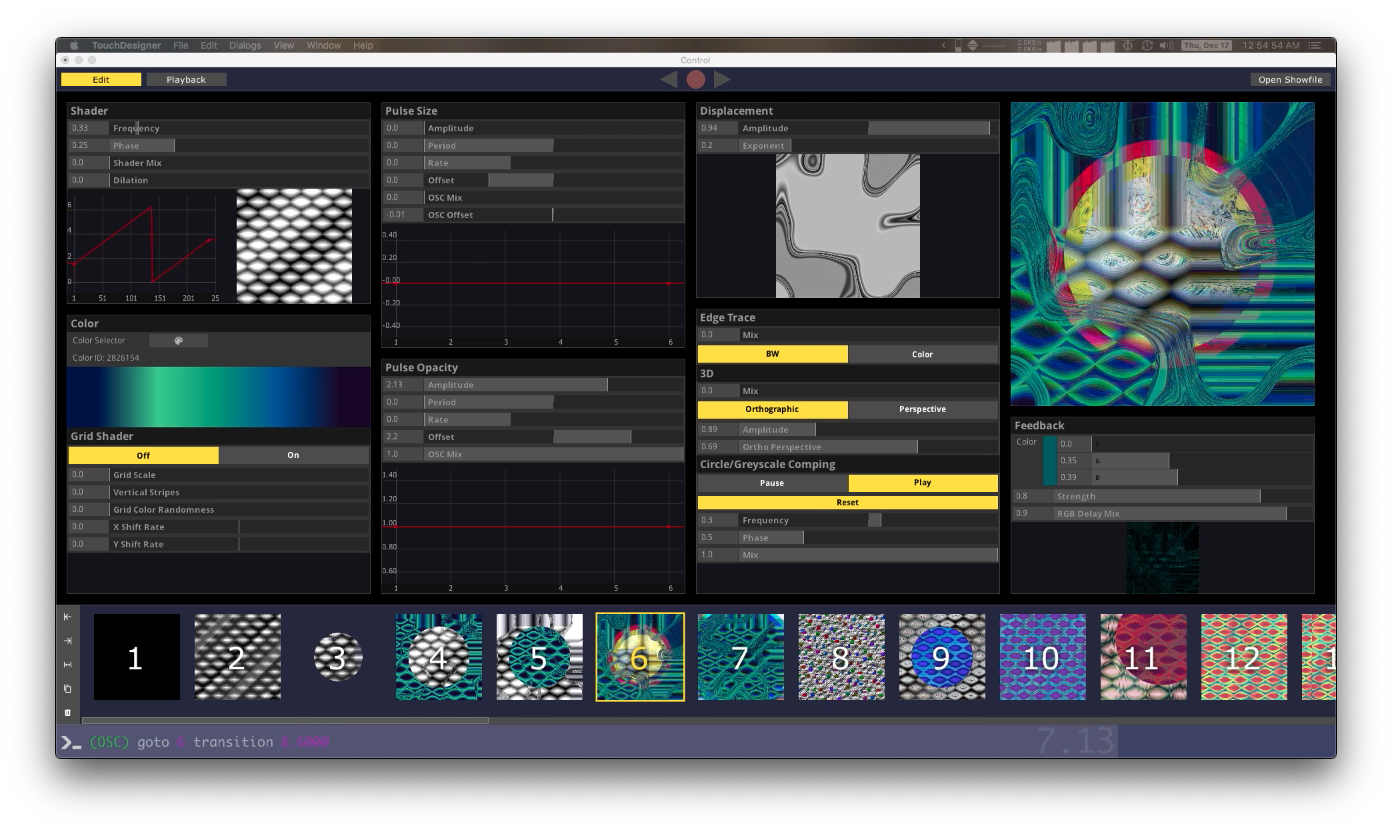

Much of the visual language is composed of simple grids, concentric shapes, and centered circles. These basic visual elements are made with GLSL shaders, and post-processing is handled in TouchDesigner. We designed a GUI (see image below) that functions much like a lighting console, allowing for easy scene and parameter changes.

The project is set up for live performance with Ableton Live, using OSC messages to communicate with the visual system.
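For a sense of what travels between the audio and visual sides, here is a minimal sketch of an OSC 1.0 message encoder using only the standard library. The addresses (`/scene`, `/grid/density`), host, and port are hypothetical, and this standalone script is not the project's actual Ableton setup.

```python
import socket
import struct


def _osc_string(s):
    """OSC-string: ASCII bytes, a null terminator, padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address, *args):
    """Build one OSC 1.0 message with int32, float32, and string arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):  # bool is a subclass of int; not handled here
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_osc(address, *args, host="127.0.0.1", port=7000):
    """Send one message over UDP; host and port are placeholders."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc(address, *args), (host, port))


# e.g. send_osc("/scene", 3) or send_osc("/grid/density", 0.75)
```

Scene changes map naturally to integer arguments and continuous parameters to floats, which mirrors how a lighting console separates cues from faders.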

While the audiovisual system is finished, the compositional elements are a rough draft. Some editing would likely take place before live performance.

Visual Art

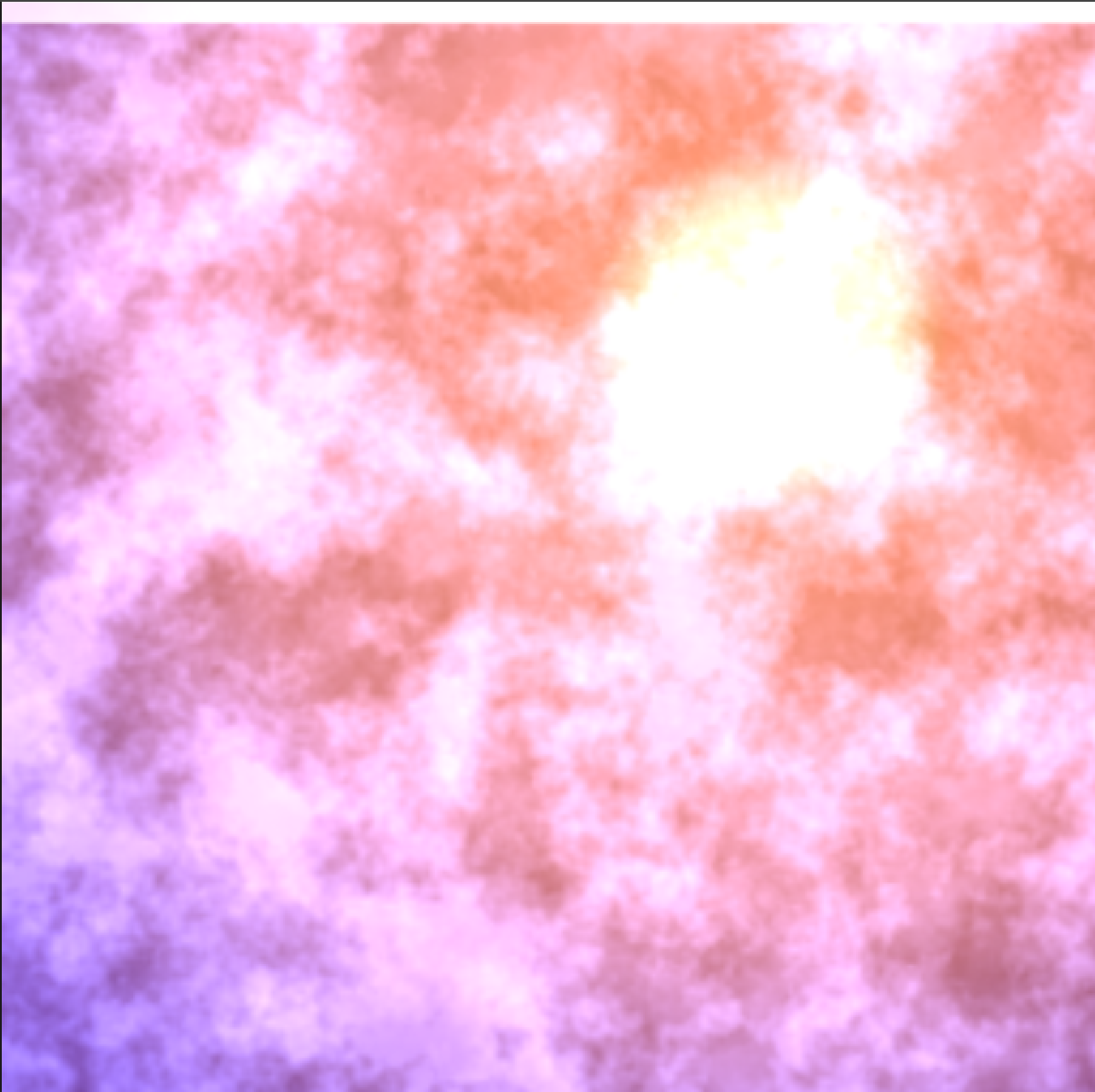

clouds 2020.

TouchDesigner, GLSL shaders.

A yellow circle (the Sun) is drawn on a blue background. A noise function simulates the density of cloud cover. A shader simulates the Sun's diffraction through clouds.
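The cloud-density part can be sketched with fractal value noise (fBm). The version below is plain Python rather than GLSL. The lattice size, octave count, and the sun model are assumptions. In particular, it swaps the piece's diffraction shader for simple exponential (Beer-Lambert style) dimming of a soft sun disk.

```python
import math
import random


def make_value_noise(seed=0, size=64):
    """Return a smooth 2-D value-noise function over a wrapping lattice of random values."""
    rng = random.Random(seed)
    lattice = [[rng.random() for _ in range(size)] for _ in range(size)]

    def fade(t):
        return t * t * (3 - 2 * t)  # smoothstep hides the lattice grid lines

    def noise(x, y):
        ix, iy = math.floor(x), math.floor(y)
        fx, fy = fade(x - ix), fade(y - iy)
        v00 = lattice[iy % size][ix % size]
        v10 = lattice[iy % size][(ix + 1) % size]
        v01 = lattice[(iy + 1) % size][ix % size]
        v11 = lattice[(iy + 1) % size][(ix + 1) % size]
        top = v00 + (v10 - v00) * fx
        bottom = v01 + (v11 - v01) * fx
        return top + (bottom - top) * fy

    return noise


def cloud_density(noise, x, y, octaves=5):
    """Fractal Brownian motion: octaves of noise at doubling frequency, halving amplitude."""
    total, amplitude, frequency, norm = 0.0, 0.5, 1.0, 0.0
    for _ in range(octaves):
        total += amplitude * noise(x * frequency, y * frequency)
        norm += amplitude
        amplitude *= 0.5
        frequency *= 2.0
    return total / norm  # normalized back to [0, 1]


def sun_brightness(x, y, density, sun=(0.5, 0.5), radius=0.1, absorption=3.0):
    """Soft sun disk with a glow falloff, dimmed exponentially by cloud density (assumed model)."""
    d = math.hypot(x - sun[0], y - sun[1])
    disk = 1.0 if d < radius else math.exp(-(d - radius) * 20.0)
    return disk * math.exp(-absorption * density)
```

Animating the clouds amounts to offsetting `x` and `y` over time before sampling `cloud_density`.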

circles_grow 2021.

Processing.

Circles are added randomly. Circles grow. When a circle is growing, if it comes in contact with another growing circle, both circles stop growing.
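The three rules translate almost directly into a per-frame update. Below is a minimal Python sketch, not the Processing code. The placement rule (new circles only spawn outside existing ones), spawn count, and growth rate are assumptions. Following the rule as stated, only pairs of *growing* circles stop each other.

```python
import math
import random


def step(circles, rng, width, height, spawn_per_step=2, rate=0.5):
    """Advance one frame. Each circle is a dict with keys x, y, r, growing."""
    # 1. Add circles at random points; skipping points inside an existing
    #    circle is an assumption, since the placement rule is not specified.
    for _ in range(spawn_per_step):
        x, y = rng.uniform(0, width), rng.uniform(0, height)
        if all(math.hypot(x - c["x"], y - c["y"]) > c["r"] for c in circles):
            circles.append({"x": x, "y": y, "r": 0.0, "growing": True})
    # 2. Circles grow.
    for c in circles:
        if c["growing"]:
            c["r"] += rate
    # 3. If two growing circles touch, both stop growing.
    growing = [c for c in circles if c["growing"]]
    for i, a in enumerate(growing):
        for b in growing[i + 1:]:
            if math.hypot(a["x"] - b["x"], a["y"] - b["y"]) <= a["r"] + b["r"]:
                a["growing"] = b["growing"] = False


def grow_circles(frames=150, width=200, height=200, seed=1):
    rng = random.Random(seed)
    circles = []
    for _ in range(frames):
        step(circles, rng, width, height)
    return circles
```

Because contact is only checked between growing circles, a circle can still grow over one that has already stopped, which is part of the look this rule produces.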

Untitled 2019.

Digital, Python, TensorFlow.

This is an example of neural style transfer, a technique that uses neural networks to render an image (here, an original digital image) in the style of another image (here, Monet's Impression, Sunrise).
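In the widely used formulation of neural style transfer, "style" is measured with Gram matrices of a convolutional network's feature maps. These are correlations between feature channels with spatial position discarded. The sketch below computes that style term for a single layer in plain Python. The feature lists stand in for real network activations, and the mean-squared normalization is one choice among several. The full TensorFlow optimization over content and style losses is not shown.

```python
def gram_matrix(features):
    """features: C channels, each a flat list of N activations.

    G[i][j] = (1/N) * sum_k F_i[k] * F_j[k]: how strongly channels i and j
    fire together, regardless of where in the image they fire.
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features] for fi in features]


def style_loss(gen_features, style_features):
    """Mean squared difference between the two Gram matrices for one layer."""
    g, s = gram_matrix(gen_features), gram_matrix(style_features)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)
```

Rearranging pixel positions leaves the Gram matrix unchanged. That is why matching it transfers brushstroke texture and palette without copying the layout of the Monet.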

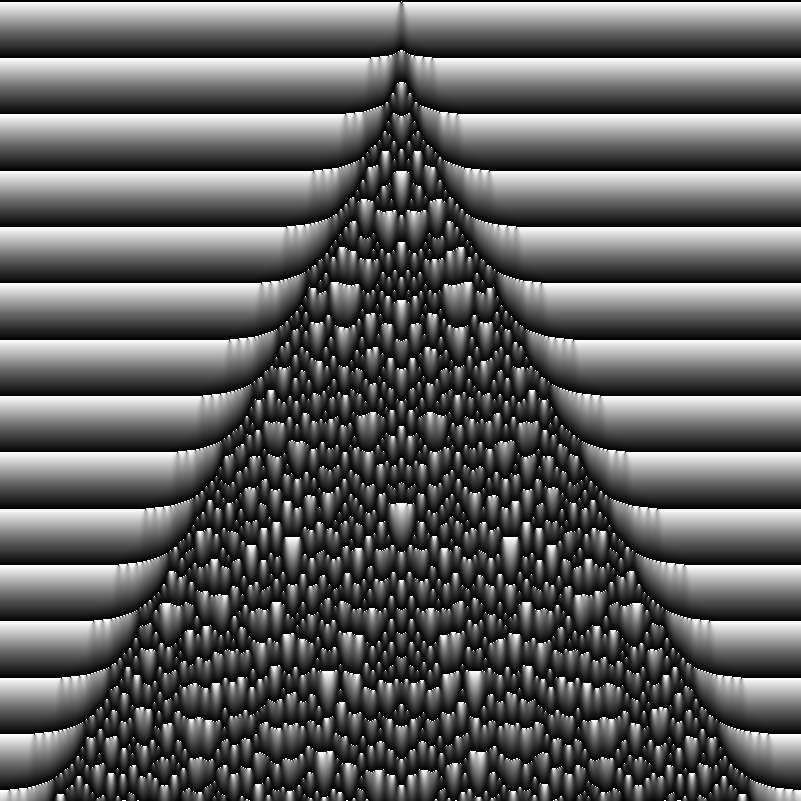

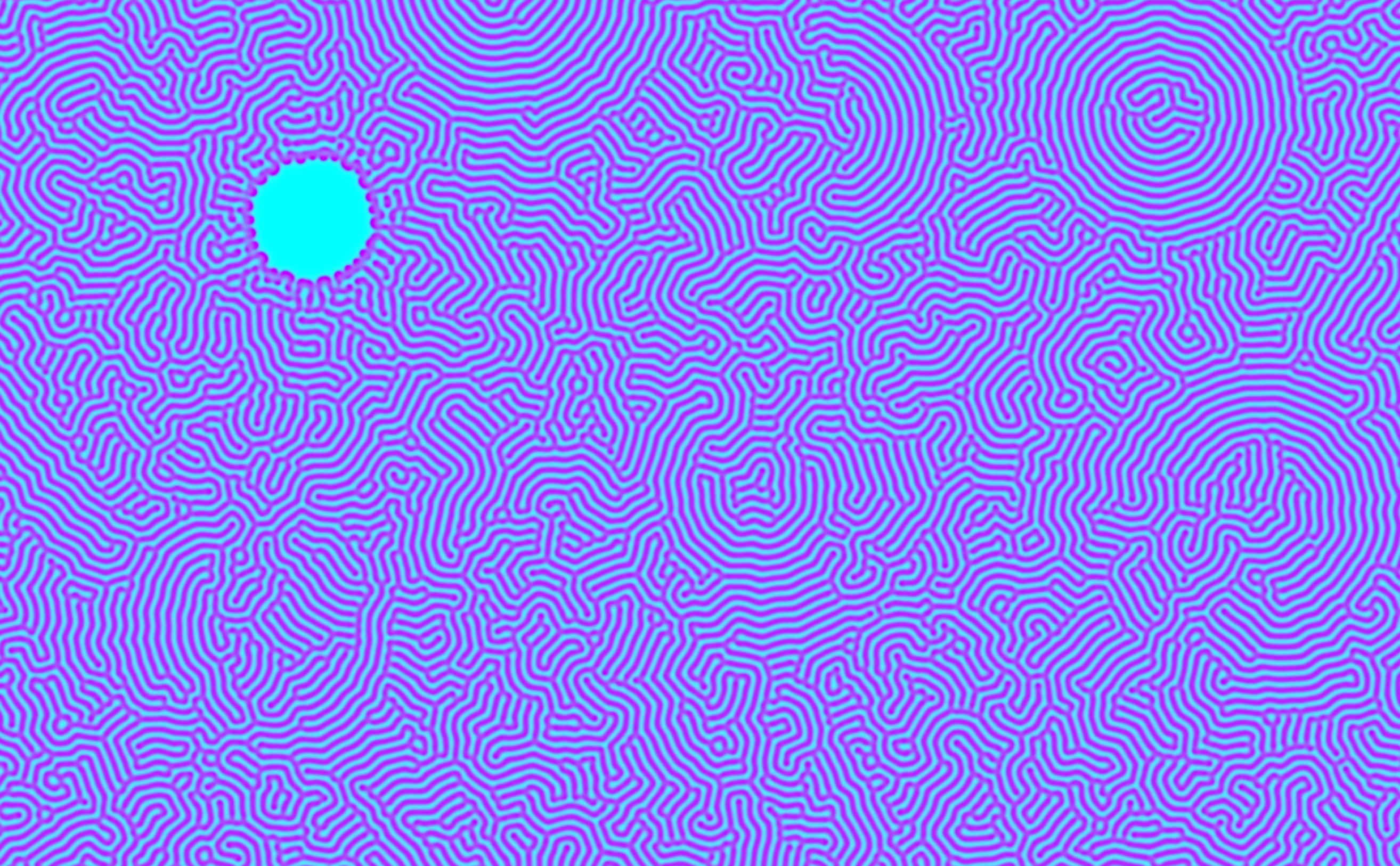

Multiscale Turing Pattern 2020.

OPENRNDR, GLSL shaders.

A Turing pattern forms when two liquid chemicals react with one another while diffusing. These patterns can look similar to the stripes on a zebra.

This is my implementation of Jonathan McCabe's Multiscale Turing Patterns. The idea is to run many Turing patterns at once, each at a different spatial scale (stripe width). At each step, we calculate which spatial scale has the least variability and use the Turing pattern at that scale, in order to maximize variability across scales.

This idea was part of the inspiration for my project on generating self-similar tokens!
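The multiscale update described above can be sketched in plain Python, following the commonly described form of McCabe's algorithm. Each scale compares a small "activator" blur with a larger "inhibitor" blur. Each pixel follows the scale where the two differ least, and the grid is then renormalized. The box blurs, radii, and step sizes are assumptions rather than the settings of the OPENRNDR piece. The grid should be wider than the largest blur window.

```python
import random

# (activator radius, inhibitor radius, step size) per scale; illustrative values.
SCALES = [(1, 2, 0.05), (2, 4, 0.04), (4, 8, 0.03)]


def box_blur(grid, r):
    """Average over a (2r+1) x (2r+1) window on a square grid, wrapping at edges."""
    n, w = len(grid), 2 * r + 1
    rows = [[sum(row[(x + k) % n] for k in range(-r, r + 1)) / w for x in range(n)]
            for row in grid]
    return [[sum(rows[(y + k) % n][x] for k in range(-r, r + 1)) / w for x in range(n)]
            for y in range(n)]


def multiscale_step(grid, scales=SCALES):
    n = len(grid)
    # One activator/inhibitor pair per spatial scale.
    fields = [(box_blur(grid, ra), box_blur(grid, ri), amount) for ra, ri, amount in scales]
    new = [[0.0] * n for _ in range(n)]
    for y in range(n):
        for x in range(n):
            # The scale whose activator and inhibitor differ least at this pixel.
            act, inh, amount = min(fields, key=lambda f: abs(f[0][y][x] - f[1][y][x]))
            # Nudge the pixel the way that scale's Turing pattern pushes it.
            new[y][x] = grid[y][x] + (amount if act[y][x] > inh[y][x] else -amount)
    # Renormalize to [-1, 1] so values stay bounded.
    lo = min(min(row) for row in new)
    hi = max(max(row) for row in new)
    if hi == lo:
        return new
    return [[2 * (v - lo) / (hi - lo) - 1 for v in row] for row in new]


def random_grid(n=64, seed=0):
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
```

Running `multiscale_step` a few hundred times on `random_grid()` and mapping values to brightness should show stripes nested inside larger stripes. In McCabe's version, the variation is often averaged over a neighborhood rather than read at a single pixel.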

Untitled 2021.

Processing, GLSL shader.

This is a one-dimensional continuous cellular automaton. The brightness of each pixel (b) depends on the brightness of the three pixels immediately above it (p1, p2, p3).

Here,

b = fract((p1 + p2 + p3) / 3.0099876 + 0.9837703), where fract(x) = x - floor(x) returns the fractional part of x, as in GLSL.
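The rule can be run row by row in plain Python as a sketch of what the shader computes. Here p1, p2, p3 are taken as the left, center, and right neighbors in the previous row. Wrapping at the edges is an assumption, since the original edge handling isn't stated.

```python
import math


def fract(x):
    """GLSL-style fract: x - floor(x), always in [0, 1)."""
    return x - math.floor(x)


def next_row(row):
    n = len(row)
    # p1, p2, p3: the three pixels above (left, center, right), wrapping at edges.
    return [fract((row[(i - 1) % n] + row[i] + row[(i + 1) % n]) / 3.0099876 + 0.9837703)
            for i in range(n)]


def run_automaton(first_row, height):
    """Stack `height` rows, each computed from the one above it."""
    rows = [list(first_row)]
    for _ in range(height - 1):
        rows.append(next_row(rows[-1]))
    return rows
```

Because the divisor is slightly above 3 and the offset is slightly below 1, each row is a near-average of its parents shifted almost a full cycle, so small differences wrap around and keep the image from settling.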

hex_grid 2019.

GLSL shader.

growth 2020.

Digital.

dot_grid 2019.

GLSL shader.

An exploration of moiré patterns.

animated_wallpaper 2020.

GLSL shader.

Turing Pattern 2020.

OPENRNDR, GLSL shader.

When two liquid chemicals react with one another while diffusing, a Turing pattern like this one can form.
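One common way to simulate this is the Gray-Scott reaction-diffusion model. It is shown here as an assumption, since the piece's exact equations aren't stated. Chemical U is fed in, V consumes U via the reaction U + 2V → 3V, and V is removed at a kill rate. Both chemicals diffuse, with U diffusing faster. The plain-Python sketch below uses a wrapping 5-point Laplacian and explicit Euler steps with illustrative parameters.

```python
def laplacian(grid, x, y):
    """5-point Laplacian on a square grid with wrap-around edges."""
    n = len(grid)
    return (grid[(y - 1) % n][x] + grid[(y + 1) % n][x]
            + grid[y][(x - 1) % n] + grid[y][(x + 1) % n] - 4 * grid[y][x])


def gray_scott_step(u, v, du=0.16, dv=0.08, feed=0.060, kill=0.062, dt=1.0):
    n = len(u)
    new_u = [[0.0] * n for _ in range(n)]
    new_v = [[0.0] * n for _ in range(n)]
    for y in range(n):
        for x in range(n):
            uvv = u[y][x] * v[y][x] ** 2  # reaction rate of U + 2V -> 3V
            new_u[y][x] = u[y][x] + dt * (du * laplacian(u, x, y) - uvv
                                          + feed * (1 - u[y][x]))
            new_v[y][x] = v[y][x] + dt * (dv * laplacian(v, x, y) + uvv
                                          - (feed + kill) * v[y][x])
    return new_u, new_v


def seeded_grid(n=48, seed_size=6):
    """All U, no V, except a small square of V in the center to start the reaction."""
    u = [[1.0] * n for _ in range(n)]
    v = [[0.0] * n for _ in range(n)]
    c = n // 2
    for y in range(c - seed_size // 2, c + seed_size // 2):
        for x in range(c - seed_size // 2, c + seed_size // 2):
            u[y][x], v[y][x] = 0.5, 0.25
    return u, v
```

Changing `feed` and `kill` moves the output between spots, stripes, and maze-like patterns. A shader version runs the same per-pixel update in parallel on the GPU.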

Musical Sketchbook

I make music! Recently, I have been exploring polyrhythmic grooves and thickly contrapuntal textures.

Here is a collection of some sounds I am working on!

willowweiner.com, 2019-21.

I started coding this site in May 2019. I came into the project with no front-end programming experience, and it is certainly a work in progress.

Coded from scratch (albeit with the help of many YouTube tutorials), the project has given me a decent understanding of HTML and CSS, and the shallowest of dives into what can be done with JavaScript.